Using latent space regression to analyze and leverage compositionality in GANs

Lucy Chai

Jonas Wulff

Phillip Isola

MIT Computer Science and Artificial Intelligence Laboratory

International Conference on Learning Representations 2021

Abstract:

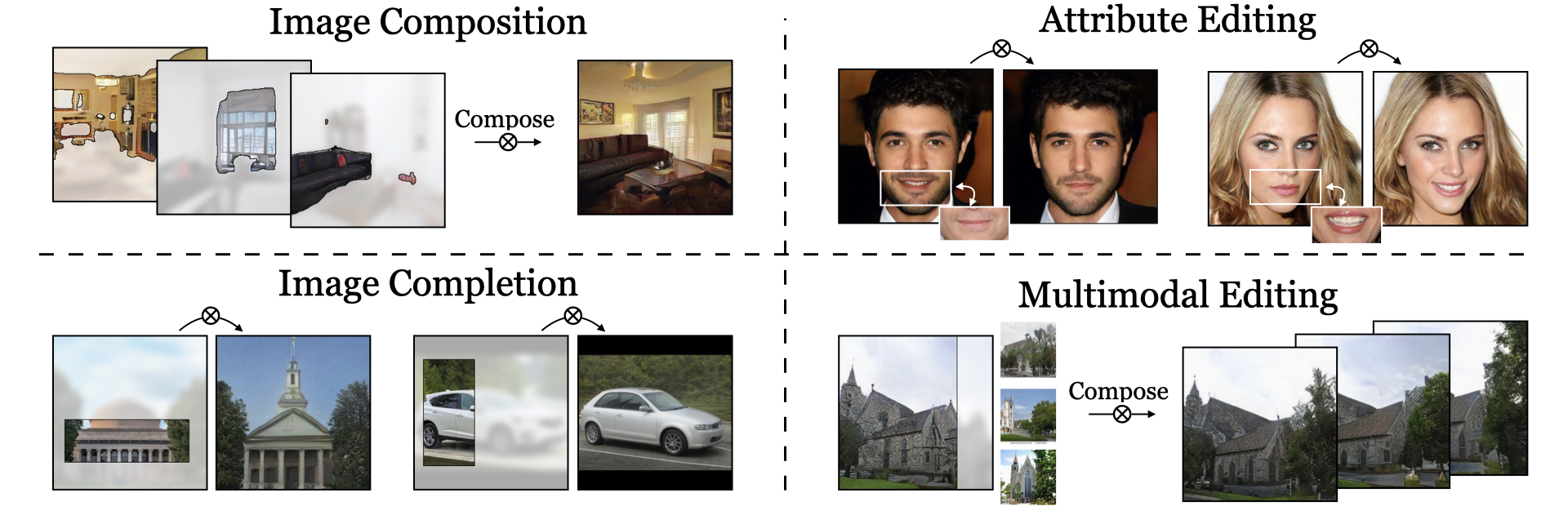

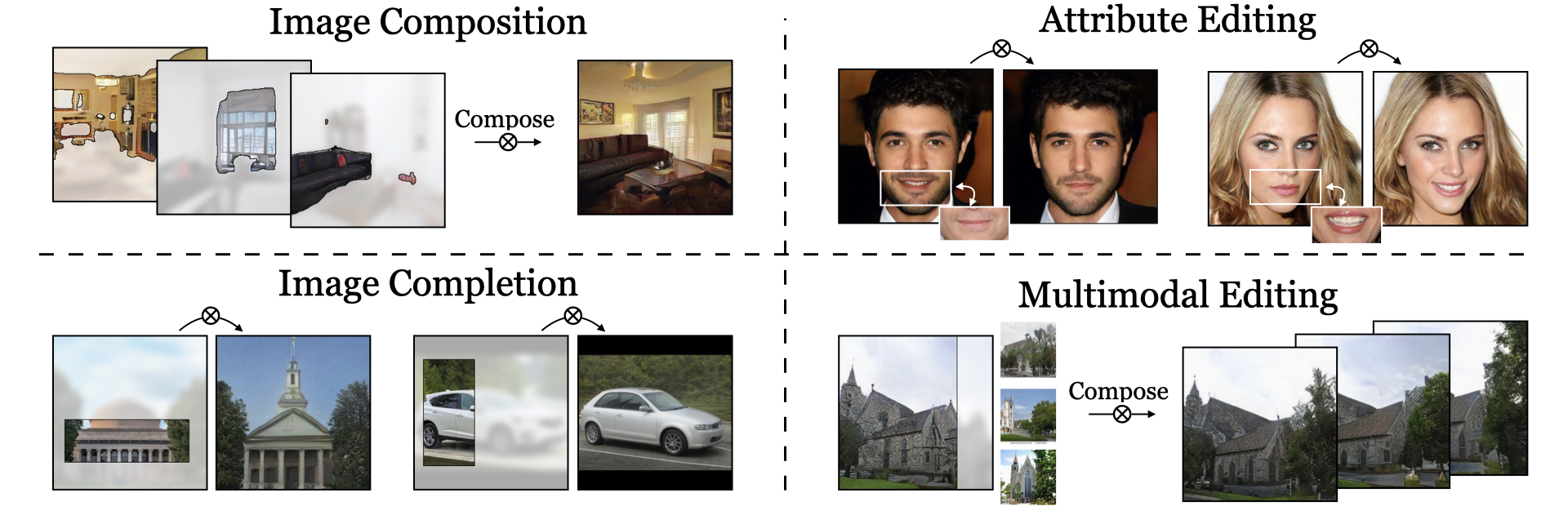

In recent years, Generative Adversarial Networks have become ubiquitous in both research and public perception, but how GANs convert an unstructured latent code to a high quality output is still an open question. In this work, we investigate regression into the latent space as a probe to understand the compositional properties of GANs. We find that combining the regressor and a pretrained generator provides a strong image prior, allowing us to create composite images from a collage of random image parts at inference time while maintaining global consistency. To compare compositional properties across different generators, we measure the trade-offs between reconstruction of the unrealistic input and image quality of the regenerated samples. We find that the regression approach enables more localized editing of individual image parts compared to direct editing in the latent space, and we conduct experiments to quantify this independence effect. Our method is agnostic to the semantics of edits, and does not require labels or predefined concepts during training. Beyond image composition, our method extends to a number of related applications, such as image inpainting or example-based image editing, which we demonstrate on several GANs and datasets, and because it uses only a single forward pass, it can operate in real-time.

Summary

We use a latent regressor network that learns from missing data to perform image composition and image completion. Together, the regressor network and a pretrained GAN form an image prior that yields realistic images even when the input is unrealistic. This animation briefly demonstrates some applications of our method.

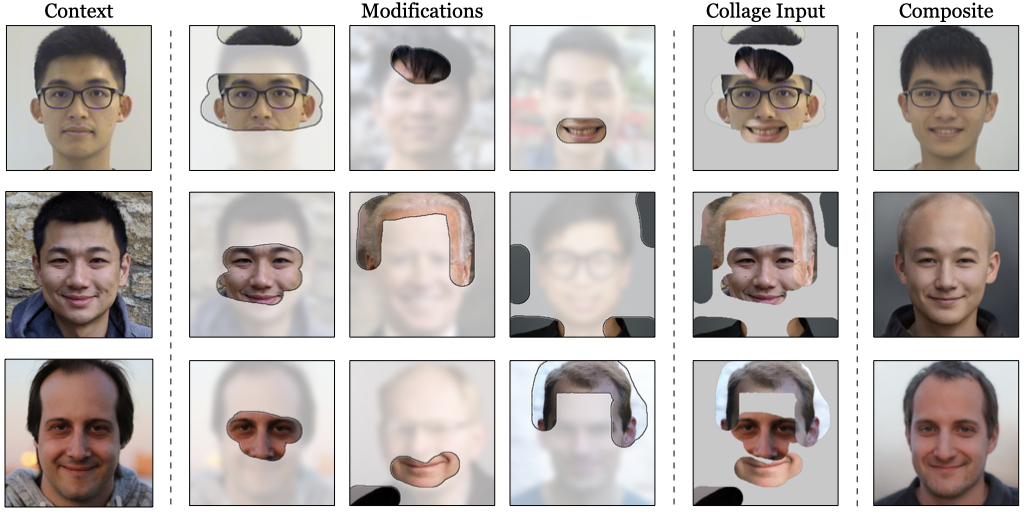
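As a rough illustration of "learning from missing data", the sketch below shows one way a regressor input could be assembled from an incomplete image and then passed through a regressor and generator in a single forward pass. The function names `make_regressor_input` and `regenerate`, the choice to zero out missing pixels, and the extra mask channel are all assumptions made for this example; `encoder` and `generator` are hypothetical callables standing in for the trained networks.

```python
import numpy as np

def make_regressor_input(image, mask):
    """Build a 4-channel input from an image (3, H, W) and a binary mask (H, W).

    Assumption for this sketch: pixels where mask == 0 are treated as missing
    and zeroed out, and the mask is appended as an extra channel so that
    "missing" can be told apart from "black".
    """
    image = np.asarray(image, dtype=np.float32)
    mask = np.asarray(mask, dtype=np.float32)
    if image.ndim != 3 or mask.shape != image.shape[1:]:
        raise ValueError("expected image (C, H, W) and mask (H, W)")
    masked = image * mask[None]  # hide the missing pixels
    return np.concatenate([masked, mask[None]], axis=0)

def regenerate(image, mask, encoder, generator):
    """Single forward pass: masked input -> latent code -> regenerated image.

    `encoder` and `generator` are hypothetical callables, not a real API.
    """
    latent = encoder(make_regressor_input(image, mask))
    return generator(latent)
```

Because no per-image optimization is involved, the cost of `regenerate` is one call to each network.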

Using the latent regressor and a pretrained GAN, we can create collages automatically and merge them into coherent composite images.

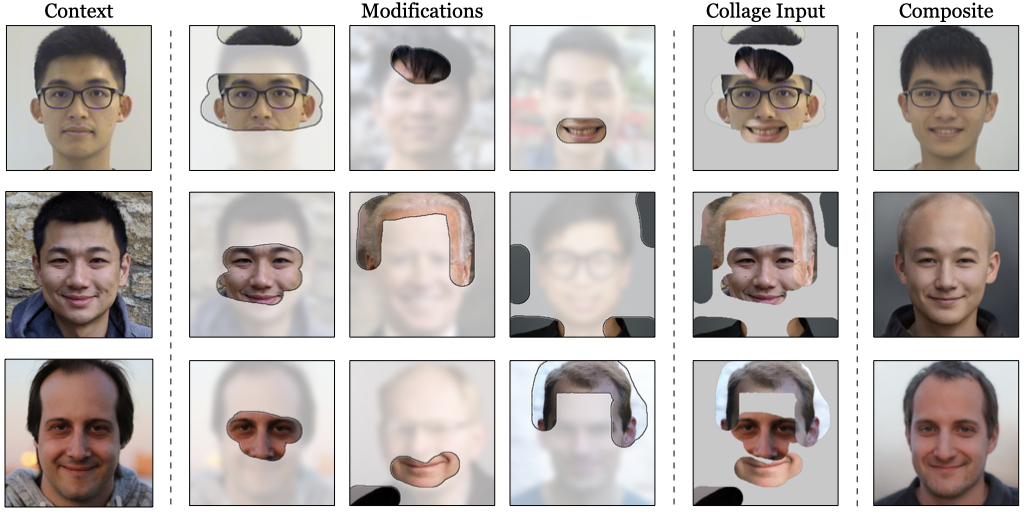
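A collage of this kind can be sketched as pasting masked parts from several images onto one canvas. The helper below, `make_collage`, is a hypothetical name for this example, and the rule that later parts overwrite earlier ones is an assumption. The resulting collage and its union mask would then be handed to the regressor and generator to obtain a coherent composite.

```python
import numpy as np

def make_collage(images, masks):
    """Paste parts from several images onto one canvas.

    images: list of (3, H, W) arrays; masks: list of binary (H, W) arrays.
    Assumption for this sketch: later parts overwrite earlier ones where
    masks overlap. Returns the collage and the union mask of filled pixels.
    """
    collage = np.zeros_like(images[0], dtype=np.float32)
    filled = np.zeros(np.shape(images[0])[1:], dtype=np.float32)
    for image, mask in zip(images, masks):
        image = np.asarray(image, dtype=np.float32)
        mask = np.asarray(mask, dtype=np.float32)
        # Keep the existing canvas outside the mask, paste the new part inside.
        collage = collage * (1 - mask[None]) + image * mask[None]
        filled = np.maximum(filled, mask)
    return collage, filled
```

Pixels never covered by any mask stay at zero in the collage and are marked as missing in the returned mask.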

We demonstrate an application of editing and merging real images.

To better fit a specific image, we first run a few seconds of optimization; the remaining editing then occurs in real time.
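The page does not say what is optimized or with which loss. One plausible reading is a short gradient descent on the latent code, starting from the regressor's prediction. The sketch below illustrates that idea under strong assumptions: the generator is a toy linear map `G(z) = weights @ z`, the loss is a masked squared error, and `refine_latent` and all of its parameters are hypothetical names for this example rather than the authors' procedure.

```python
import numpy as np

def refine_latent(z_init, target, mask, weights, steps=100, lr=0.1):
    """Gradient descent on the masked squared error of a toy linear generator.

    The generator is G(z) = weights @ z, a hypothetical stand-in for a real
    GAN. target and mask are flattened images of length weights.shape[0];
    mask is binary and selects the pixels that should be matched.
    """
    z = np.array(z_init, dtype=np.float64)
    mask = np.asarray(mask, dtype=np.float64)
    for _ in range(steps):
        residual = mask * (weights @ z - target)  # error on known pixels only
        grad = 2.0 * weights.T @ residual / mask.sum()
        z -= lr * grad
    return z
```

After this warm-up, edits can reuse the refined starting point while each update remains a single forward pass.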

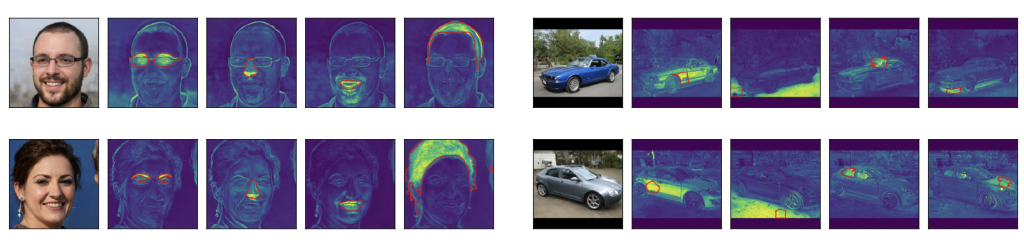

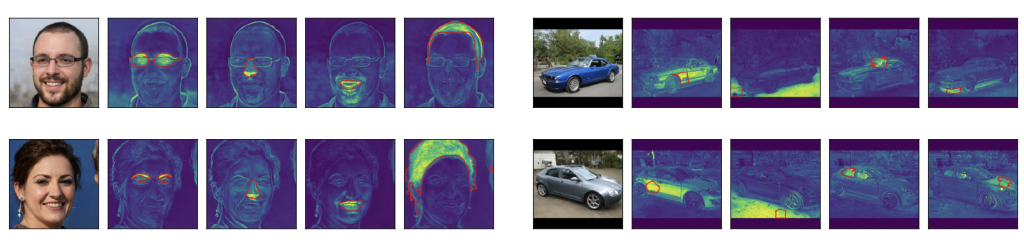

We use the latent regressor to investigate which parts of an image the GAN learns to change independently. For example, we visualize which regions of a given image change when the portion outlined in red is modified. The resulting variations show regions of the image that commonly vary together, which can be interpreted as a form of unsupervised part discovery.
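One simple way to build such a visualization is to regenerate the image many times, each time with a different replacement for the selected part, and measure how much every pixel varies across the results. The sketch below uses the per-pixel standard deviation for this; `variation_map` and the choice of statistic are assumptions for illustration, not necessarily the measure used in the paper.

```python
import numpy as np

def variation_map(outputs):
    """Per-pixel variation across a set of regenerated images.

    outputs: array of shape (N, 3, H, W), where each image was produced with
    a different replacement for the same image part. Returns an (H, W) map:
    standard deviation over the N samples, averaged over color channels.
    """
    outputs = np.asarray(outputs, dtype=np.float32)
    if outputs.ndim != 4:
        raise ValueError("expected outputs of shape (N, C, H, W)")
    return outputs.std(axis=0).mean(axis=0)
```

Regions with high values in the map change together with the modified part, while values near zero indicate regions that stay fixed.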

Additional Samples

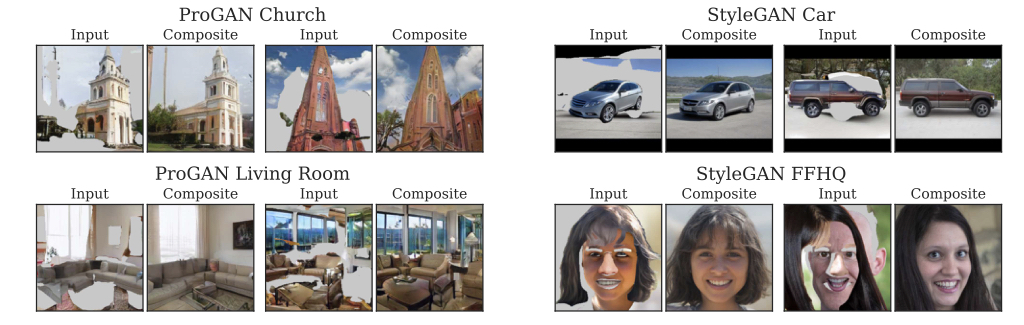

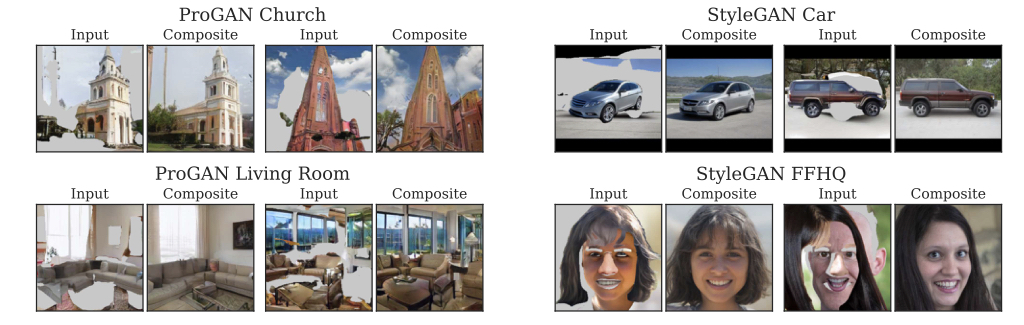

Click on the panels below to view uncurated composite images generated from randomly sampled image parts.

Click on the panels below to view comparisons of different image reconstruction methods operating on the same randomly sampled real image parts. Composition is a balance between unifying the input parts into a realistic output and remaining close to those input parts; the methods trade off these two factors differently. A third axis is inference time. In the linked pages, methods that require additional per-image optimization are marked with (*), whereas the other methods use a single forward pass.
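The "remaining close to the input parts" side of this trade-off can be quantified with a reconstruction error restricted to the pixels that were actually provided. The sketch below computes a masked mean absolute error; `masked_l1` is a hypothetical helper for this example. The realism side needs a distribution-level image quality metric (for instance FID), which is not shown here.

```python
import numpy as np

def masked_l1(output, target, mask):
    """Mean absolute error over the pixels where mask == 1.

    output, target: (C, H, W) arrays; mask: binary (H, W) array marking the
    input parts that the output is supposed to stay close to.
    """
    output = np.asarray(output, dtype=np.float32)
    target = np.asarray(target, dtype=np.float32)
    mask = np.asarray(mask, dtype=np.float32)
    diff = np.abs(output - target)
    count = mask.sum() * diff.shape[0]  # masked pixels times channels
    if count == 0:
        raise ValueError("mask selects no pixels")
    return float((diff * mask[None]).sum() / count)
```

A lower value means the regenerated image stays closer to the provided parts, which often comes at some cost in realism when the parts themselves are inconsistent.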

Reference

L Chai, J Wulff, P Isola. Using latent space regression to analyze and leverage compositionality in GANs.

International Conference on Learning Representations, 2021.

@inproceedings{chai2021latent,
  title={Using latent space regression to analyze and leverage compositionality in GANs.},
  author={Chai, Lucy and Wulff, Jonas and Isola, Phillip},
  booktitle={International Conference on Learning Representations},
  year={2021}
}

Acknowledgements:

We would like to thank David Bau, Richard Zhang, Tongzhou Wang, Luke Anderson, and Yen-Chen Lin for helpful discussions and feedback. Thanks to Antonio Torralba, Alyosha Efros, Richard Zhang, Jun-Yan Zhu, Wei-Chiu Ma, Minyoung Huh, and Yen-Chen Lin for permission to use their photographs. LC is supported by the National Science Foundation Graduate Research Fellowship under Grant No. 1745302. JW is supported by a grant from Intel Corp.
